Distributed Black-box Attack against Image Classification Cloud Services

Han Wu, Sareh Rowlands, and Johan Wahlstrom

Source Code

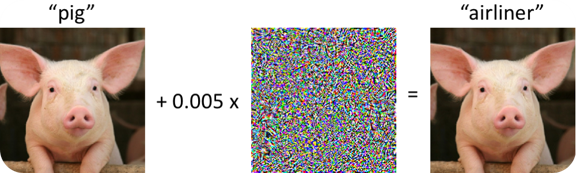

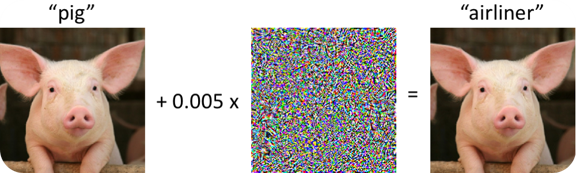

Deep Learning Models are vulnerable to Adversarial Attacks

White-box Attacks: fast and efficient.

Black-box Attacks: slow and reliant on queries.

- Increasing the attack success rate.

- Reducing the number of queries.

- Reducing the total attack time.

How to accelerate black-box attacks?

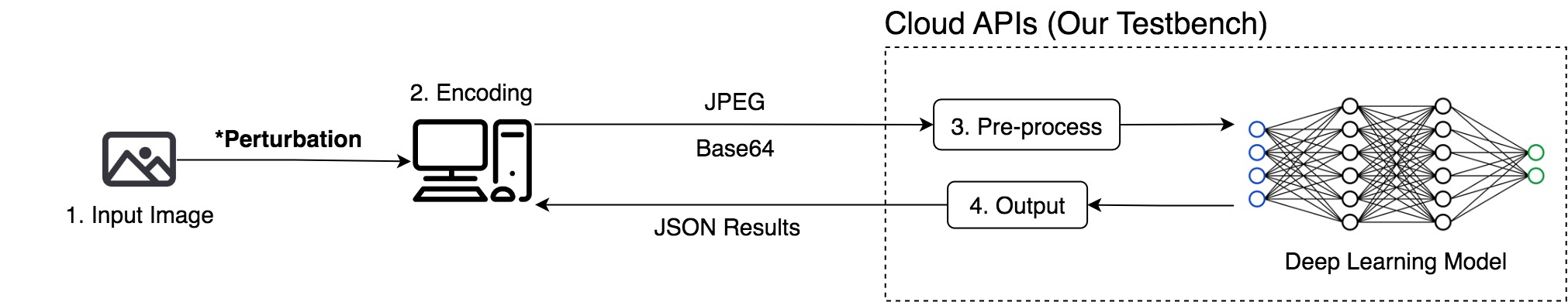

Cloud APIs are deployed behind a load balancer that distributes the traffic across several servers.

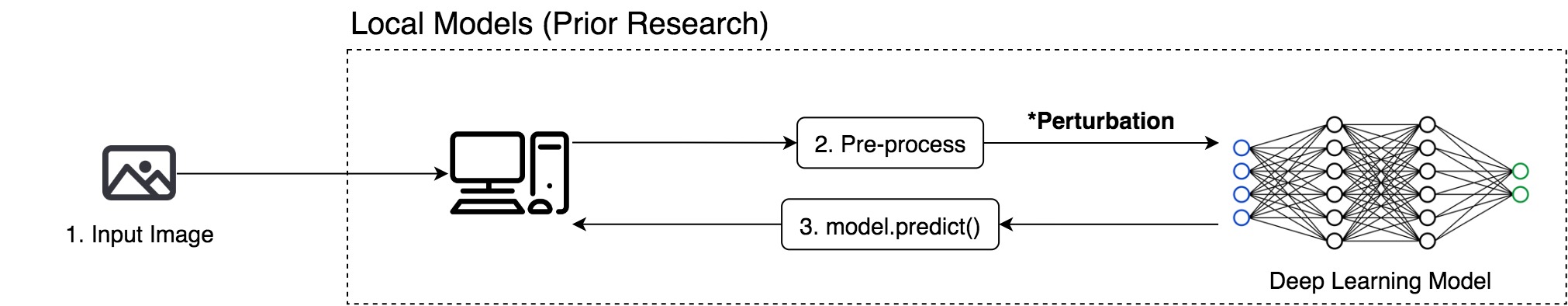

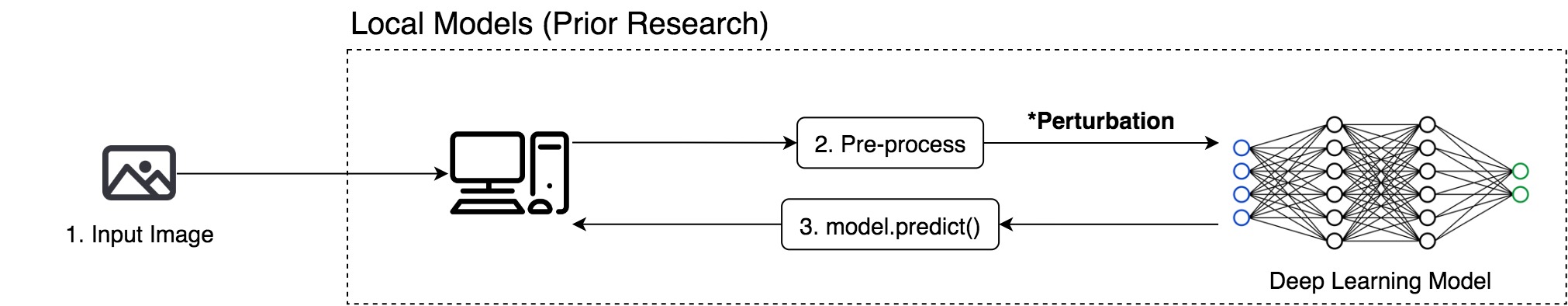

Local Models & Cloud APIs

Most prior research used local models to test black-box attacks.

We launch black-box attacks directly against cloud services.

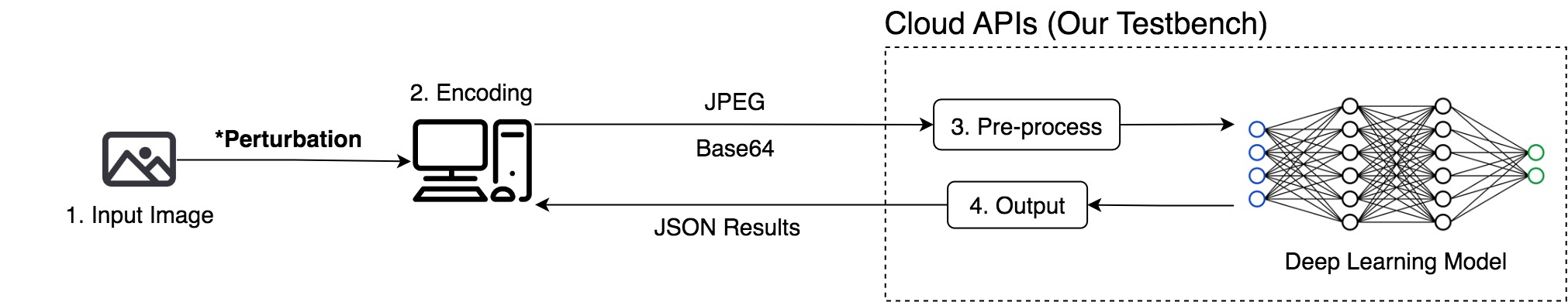

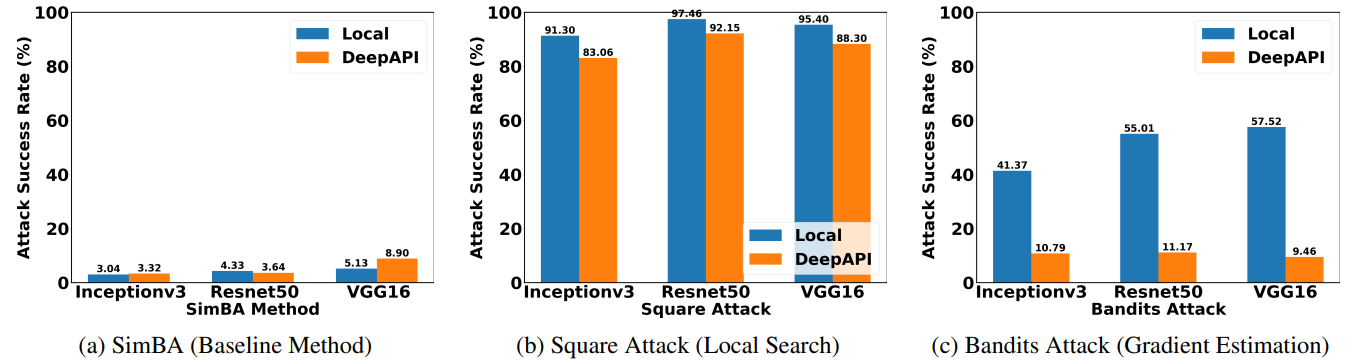

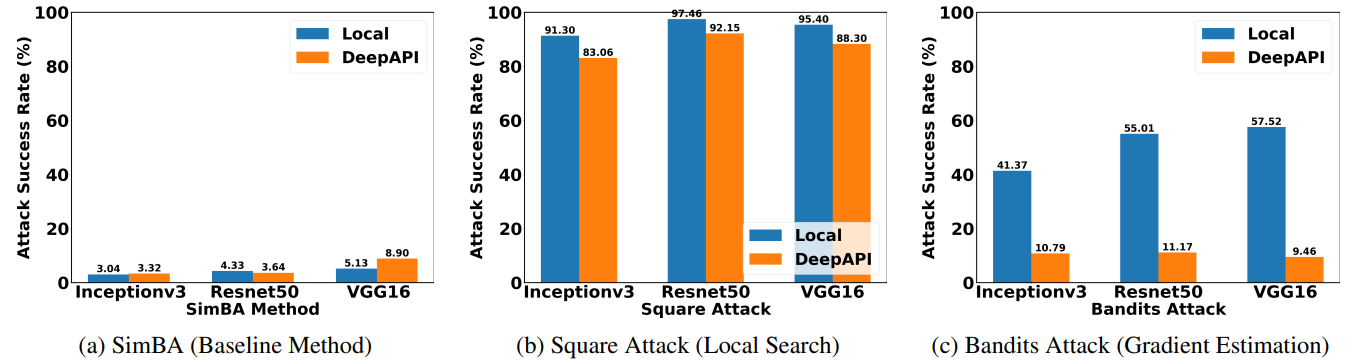

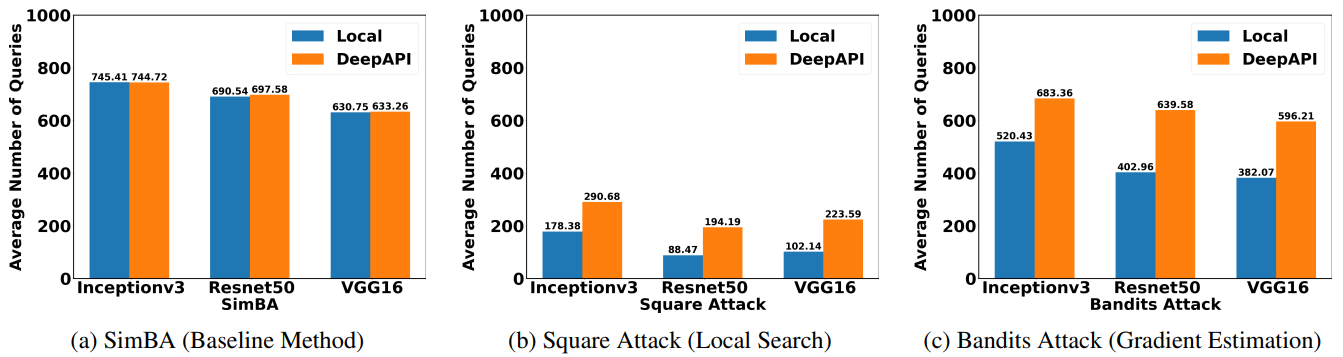

Attacking Cloud APIs is more challenging than attacking local models

Attacking cloud APIs achieves a lower success rate than attacking local models.

Attacking cloud APIs requires more queries than attacking local models.

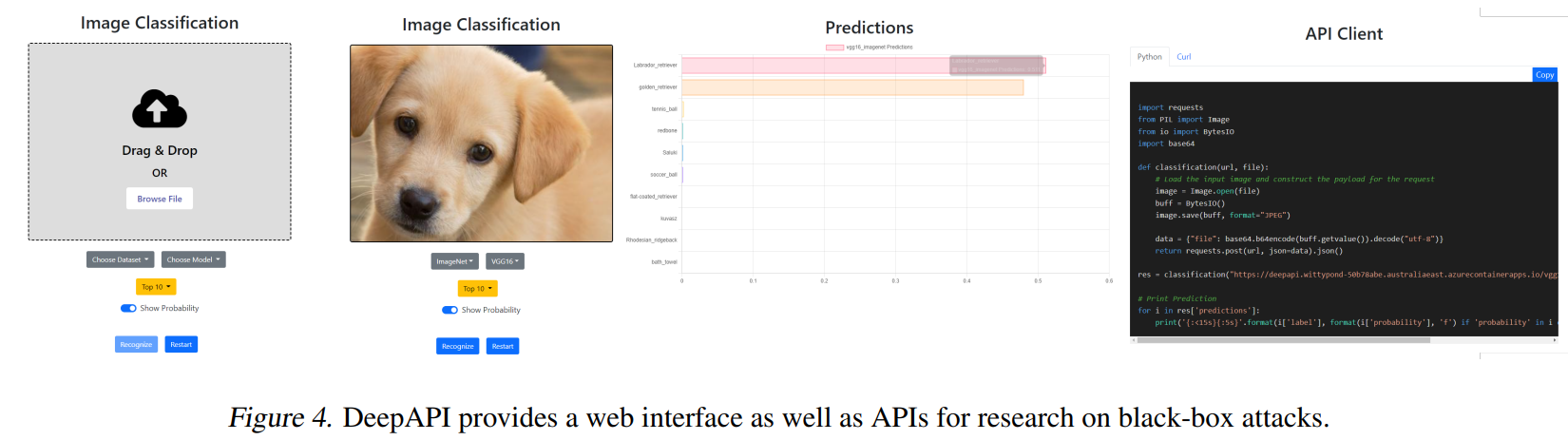

DeepAPI - The Cloud API we attack

We open-source our image classification cloud service for research on black-box attacks.

DeepAPI Deployment

Using Docker

$ docker run -p 8080:8080 wuhanstudio/deepapi

Serving on port 8080...

Using Pip

$ pip install deepapi

$ python -m deepapi

Serving on port 8080...

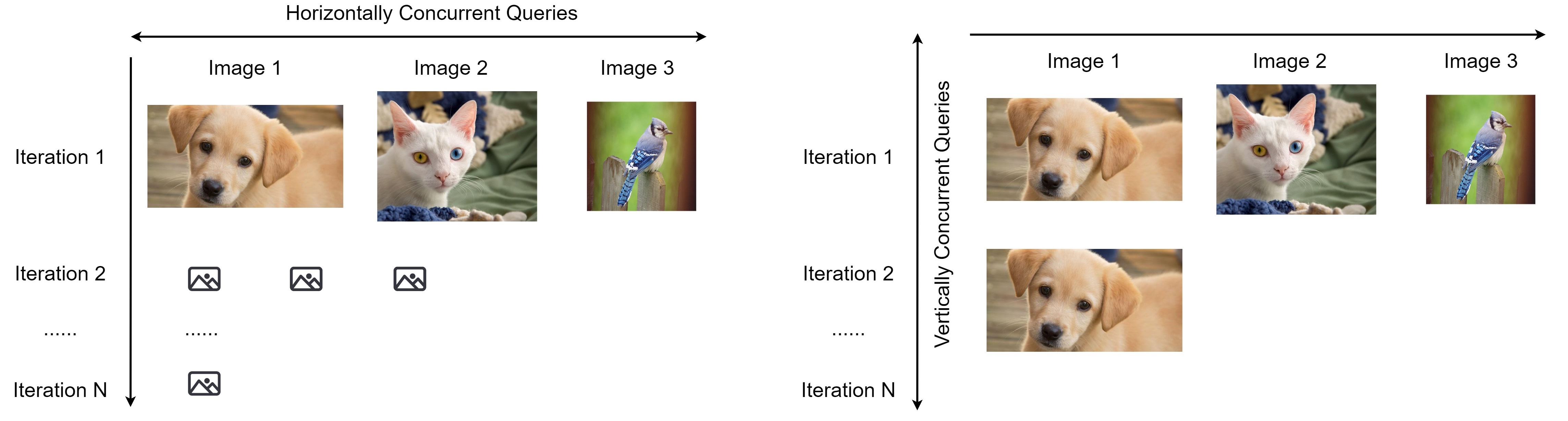

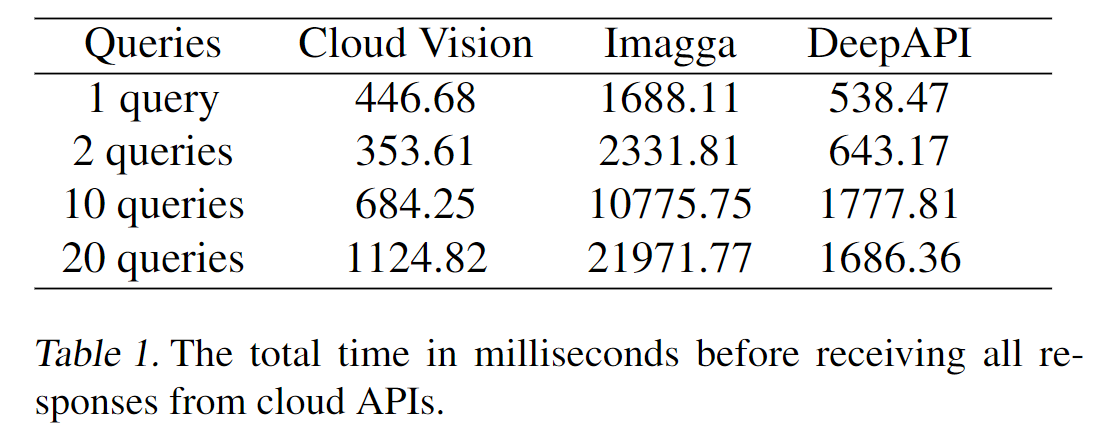

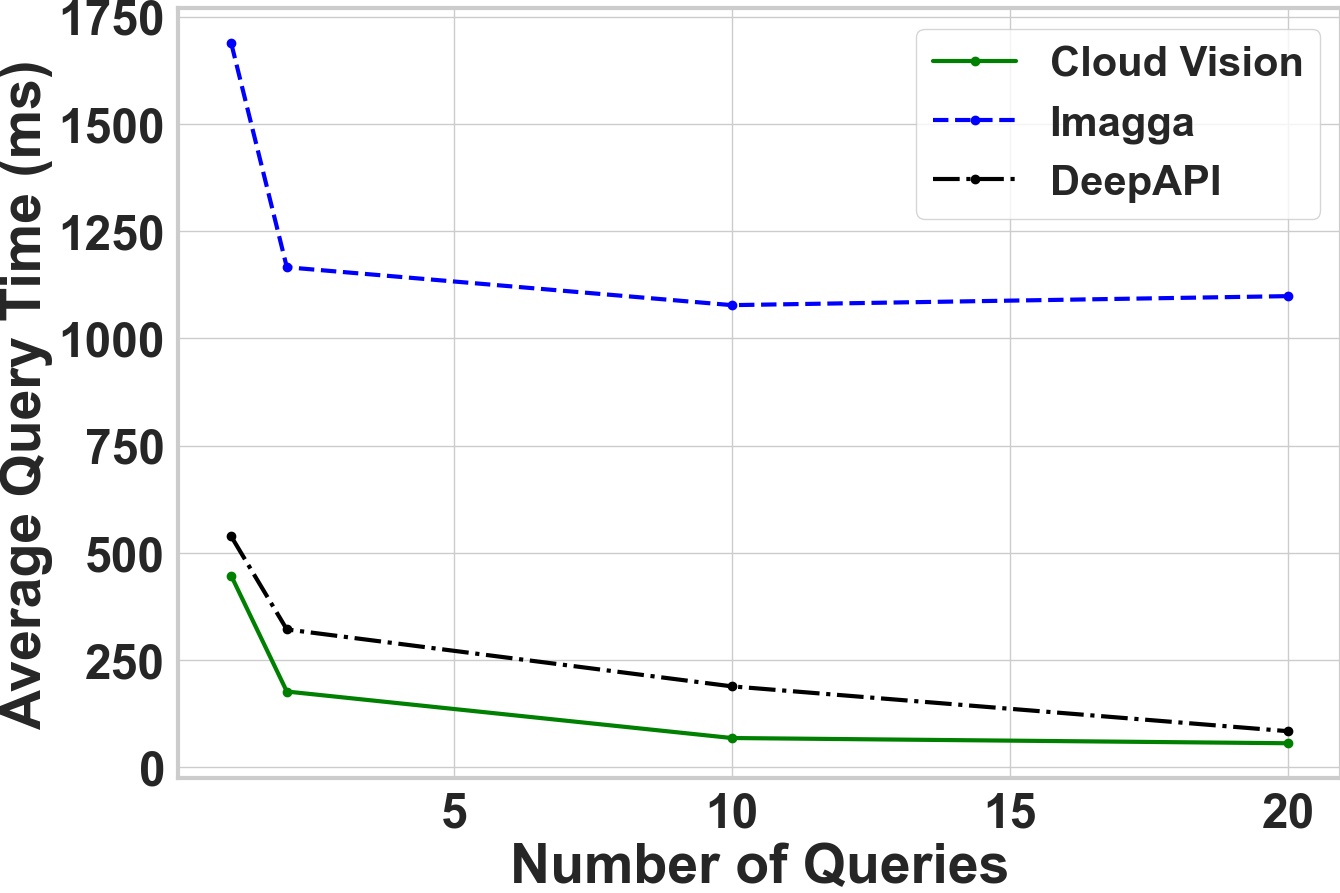

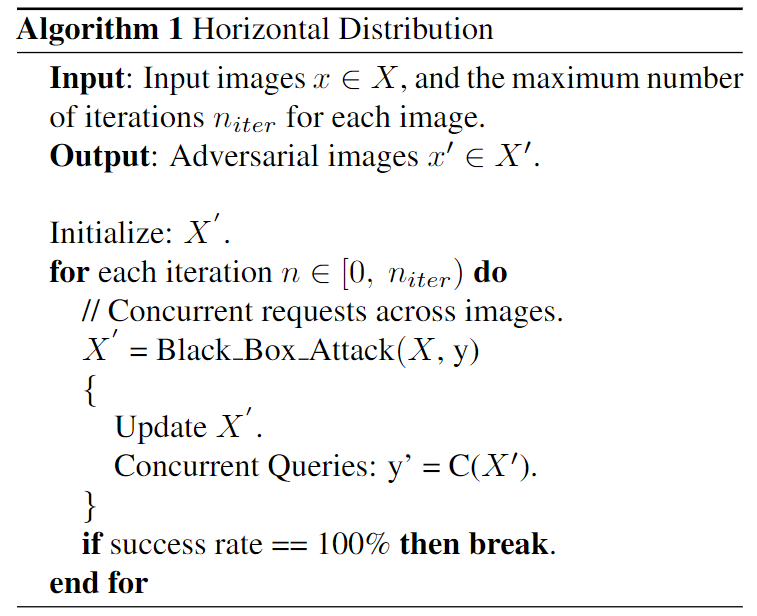

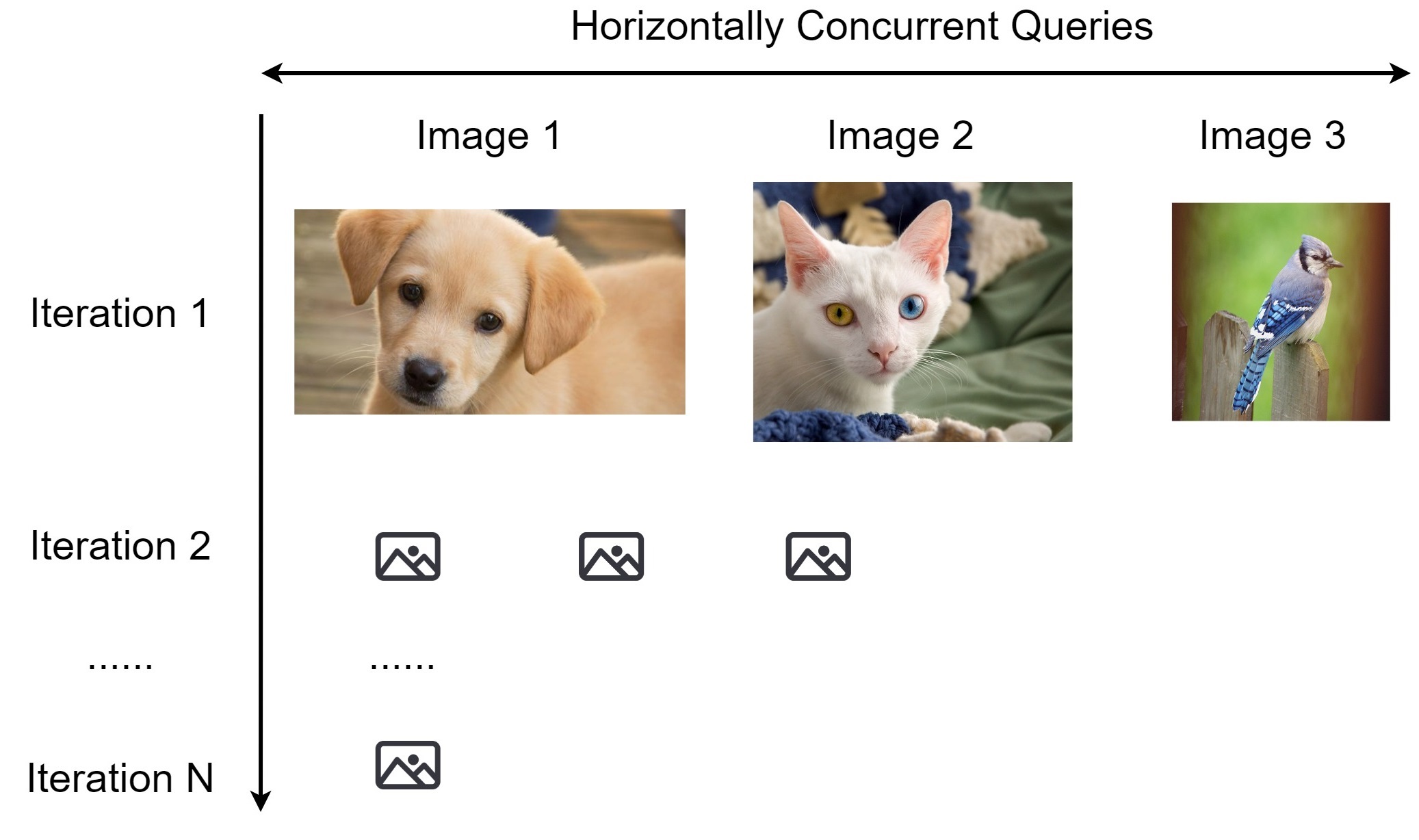

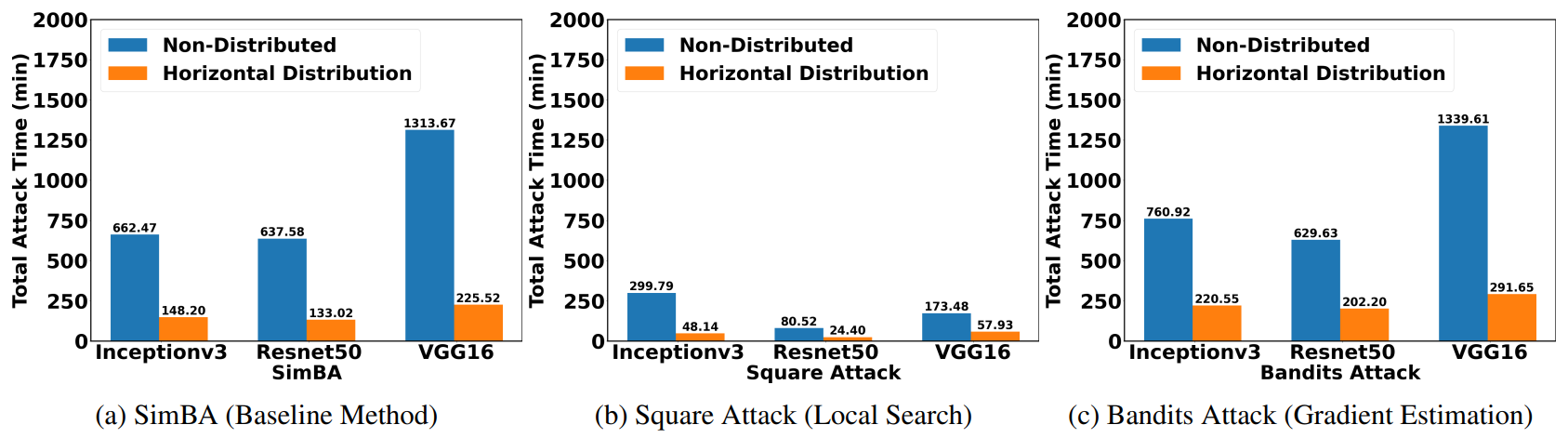

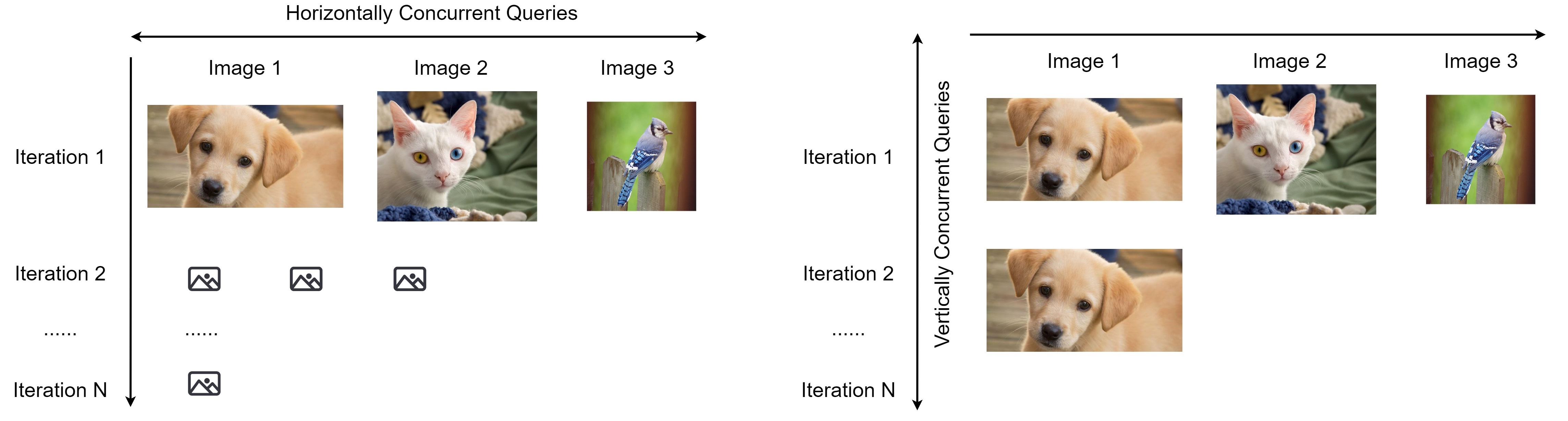

Horizontal Distribution

Horizontal distribution reduces the total attack time by a factor of five.
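
The slides do not spell out the mechanism, so the following is only a minimal sketch, assuming horizontal distribution means sending the queries for several different images concurrently, so that a load balancer can spread them across servers. The function `fake_cloud_query` and its 50 ms latency are hypothetical stand-ins for a real HTTP request to a classification API; they are not part of DeepAPI.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_cloud_query(image):
    """Hypothetical stand-in for one HTTP request to a classification API."""
    time.sleep(0.05)          # simulated network + inference latency (assumed)
    return sum(image) % 10    # dummy "label", not a real prediction


def query_sequential(images, query):
    # Baseline: one request at a time, total time grows with len(images).
    return [query(img) for img in images]


def query_horizontal(images, query, max_workers=8):
    # One in-flight request per image; map() preserves the input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(query, images))


images = [[i, i + 1, i + 2] for i in range(8)]

start = time.perf_counter()
seq = query_sequential(images, fake_cloud_query)
t_seq = time.perf_counter() - start

start = time.perf_counter()
par = query_horizontal(images, fake_cloud_query)
t_par = time.perf_counter() - start

print(f"sequential: {t_seq:.2f}s, concurrent: {t_par:.2f}s")
```

Threads are used here because each query spends its time waiting on I/O. The speedup against a real service depends on how many servers sit behind the load balancer and on any rate limits, so the toy timing should not be read as a reproduction of the reported factor of five.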

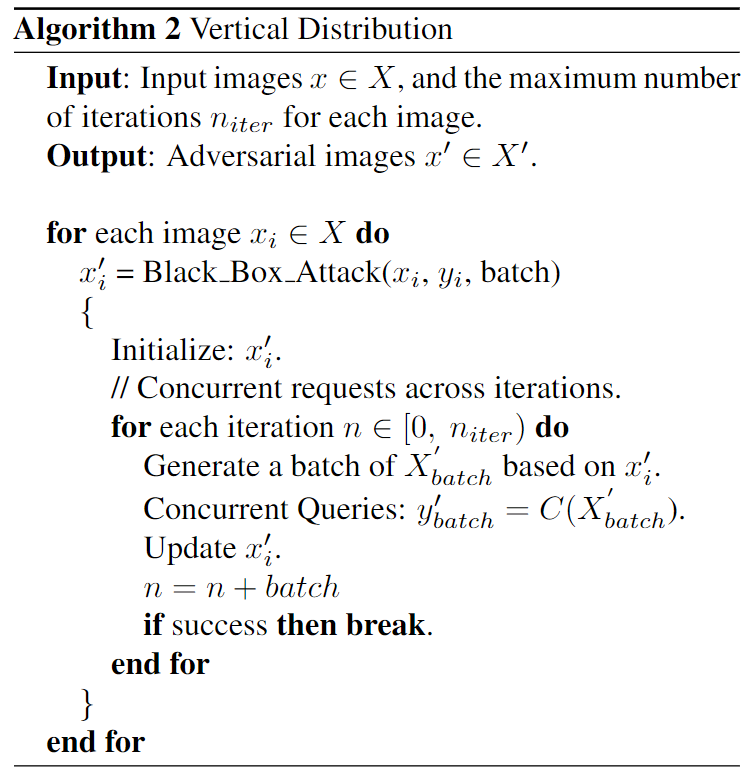

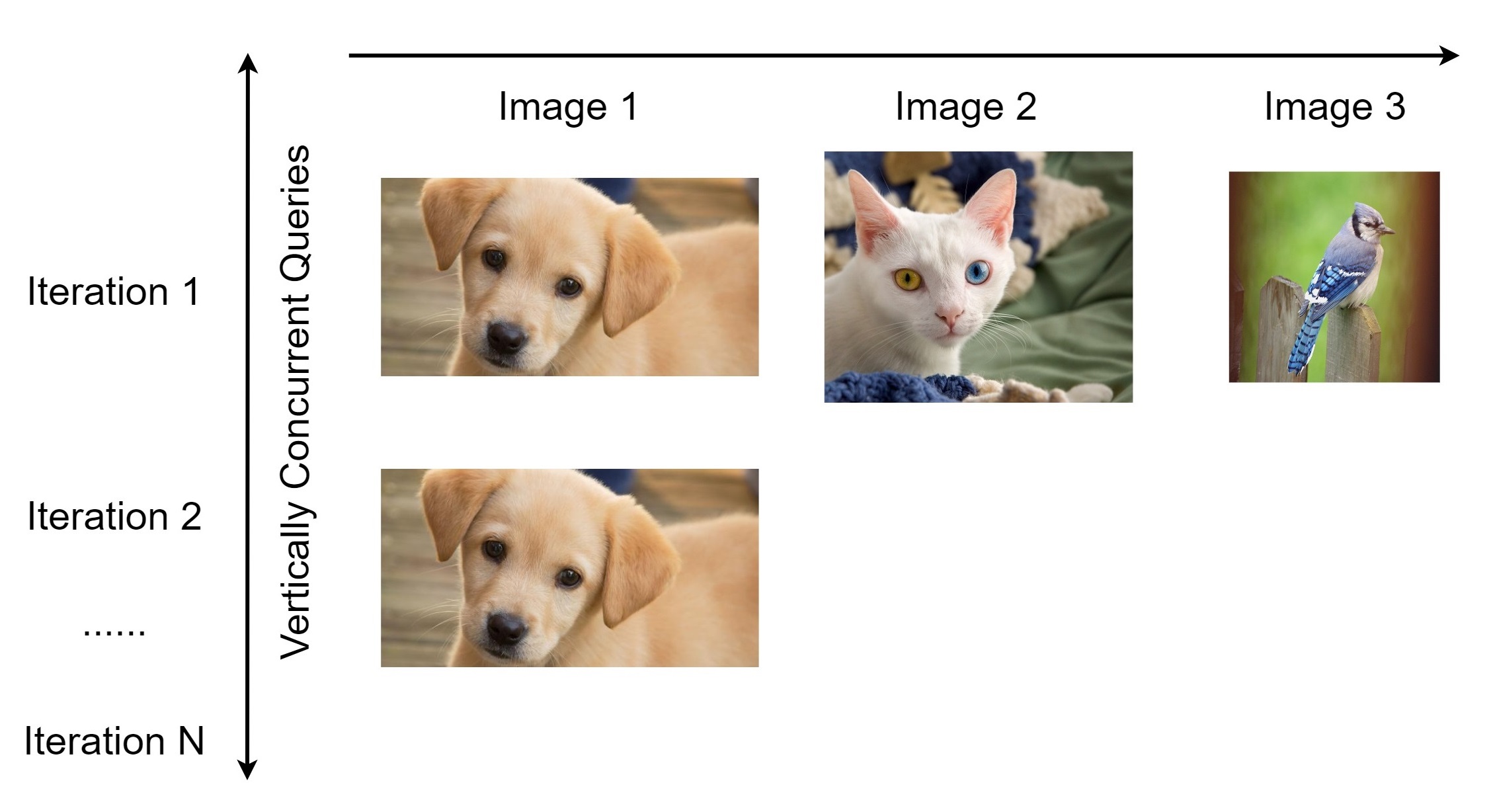

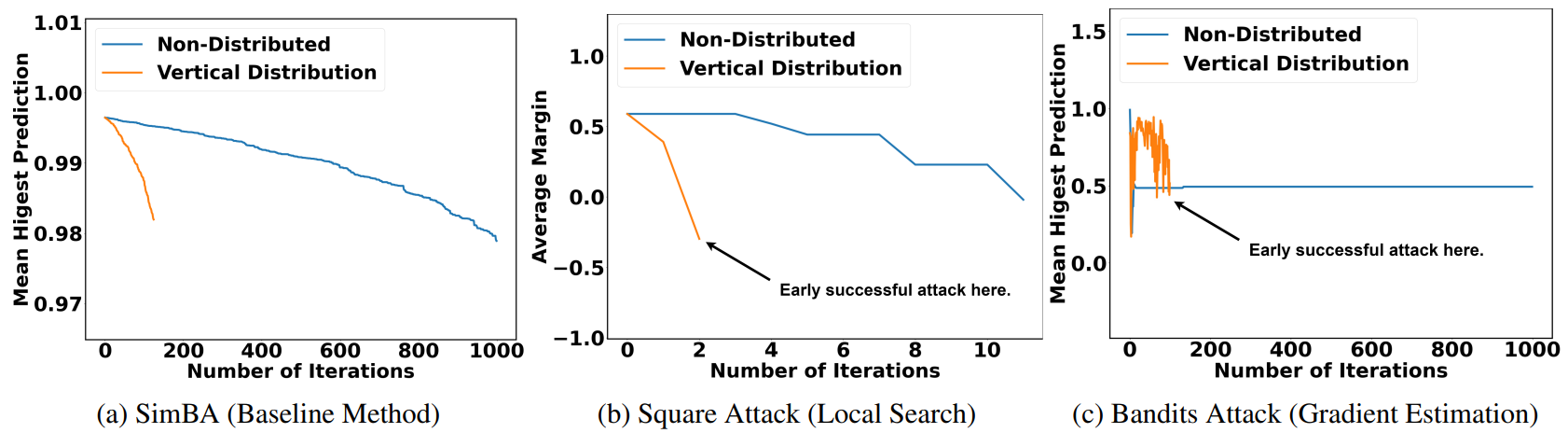

Vertical Distribution

Vertical distribution achieves successful attacks much earlier.
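
As a rough illustration, assuming vertical distribution means sending several candidate queries for the same image concurrently and keeping the best one, a single search step could look like the sketch below. The scoring function `fake_cloud_score`, the random-sign perturbation, and all parameter values are hypothetical choices for this example, not the attack evaluated in the talk.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def fake_cloud_score(image):
    # Hypothetical stand-in for a cloud API call: returns a toy "confidence"
    # for the true class. A real attack would send an HTTP request here.
    return 1.0 / (1.0 + sum(abs(p - 0.5) for p in image))


def perturb(image, eps, rng):
    # Random +/- eps step per pixel, clipped to the valid range [0, 1].
    return [min(1.0, max(0.0, p + rng.choice((-eps, eps)))) for p in image]


def vertical_step(image, score, query, n_candidates, eps, rng, pool):
    # Query several perturbed copies of the SAME image concurrently and
    # accept the best candidate only if it lowers the confidence.
    candidates = [perturb(image, eps, rng) for _ in range(n_candidates)]
    scores = list(pool.map(query, candidates))
    best = min(range(n_candidates), key=scores.__getitem__)
    if scores[best] < score:
        return candidates[best], scores[best]
    return image, score


rng = random.Random(0)
image = [0.5] * 16
initial_score = fake_cloud_score(image)
score = initial_score

with ThreadPoolExecutor(max_workers=8) as pool:
    for _ in range(10):
        image, score = vertical_step(
            image, score, fake_cloud_score, 8, 0.05, rng, pool
        )

final_score = score
print(f"confidence: {initial_score:.3f} -> {final_score:.3f}")
```

Because each step evaluates several candidates in roughly the time of one request, the search can make progress in fewer sequential rounds, which is one plausible reading of why successful attacks arrive earlier.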

Conclusion

Thanks

Source Code